Pro Tips: Run Multiple Jenkins CI Servers on a Single Machine

Jenkins (or Hudson if you work for Oracle) is a great, simple and steady continuous integration and build server. One of its greatest features is that despite being packaged as a standard .war ready to be dropped into your JEE web container of choice … it also contains an embedded web container that makes the .war everything you need in most situations. Simply run

java -jar jenkins.war

and up starts Jenkins on port 8080 in all of its glory. All of Jenkins’ configuration files, plugins, and working directories go under <USER_HOME>/.jenkins by default. Perfect.

Except if you want to run multiple instances on the same machine: they will collide over that shared config folder. It turns out this is a piece of cake, but the docs are hard to find. If JENKINS_HOME is set (as an environment variable or, as below, as a Java system property), Jenkins uses that path for its configuration files, so a simple change means we can run Jenkins out of any directory we desire:

java -DJENKINS_HOME=/path/to/configs -jar jenkins.war

What about the port, you say? Winstone, the embedded web container, has a simple option for this too:

java -DJENKINS_HOME=/path/to/configs -jar jenkins.war --httpPort=9090
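If you run more than a couple of instances, it helps to keep each instance's home directory and port together and check that no two instances share either. Here is a small Node.js sketch, purely illustrative. `jenkinsArgs` and `findCollisions` are hypothetical helpers and the paths are placeholders. The only Jenkins-specific parts are the `-DJENKINS_HOME` property and the `--httpPort` option shown above:

```javascript
// Hypothetical helper (not part of Jenkins): builds the java argument list
// for one Jenkins instance with its own home directory and HTTP port.
function jenkinsArgs({ home, port, war = 'jenkins.war' }) {
  return ['-DJENKINS_HOME=' + home, '-jar', war, '--httpPort=' + port];
}

// Two instances collide if they share a home directory or a port.
function findCollisions(instances) {
  const problems = [];
  const seenHomes = new Set();
  const seenPorts = new Set();
  for (const { home, port } of instances) {
    if (seenHomes.has(home)) problems.push('duplicate JENKINS_HOME: ' + home);
    if (seenPorts.has(port)) problems.push('duplicate port: ' + port);
    seenHomes.add(home);
    seenPorts.add(port);
  }
  return problems;
}

// Placeholder paths; substitute your own.
const instances = [
  { home: '/path/to/configs-a', port: 8080 },
  { home: '/path/to/configs-b', port: 9090 },
];

if (findCollisions(instances).length === 0) {
  for (const inst of instances) {
    // Only prints the commands. To launch one, the same array could be
    // passed to require('child_process').spawn('java', jenkinsArgs(inst)).
    console.log('java ' + jenkinsArgs(inst).join(' '));
  }
}
```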

QUnit-CLI: Running QUnit with Rhino

Previously I talked about wanting to run QUnit outside the browser and about some issues I ran into. Finally, I have QUnit running from the command line: QUnit-CLI

After a good deal of hacking and a push from jzaefferer, I have gotten the example code in QUnit-CLI to run using Rhino with no browser in sight. This isn’t a complete substitute for in-browser testing, but it makes integration with build servers and faster feedback possible.

```
/projects/qunit-cli/js suite.js
PASS - Adding numbers
FAIL - Subtracting numbers
    PASS|OK|subtraction function exists|
    FAIL|EQ|Intended bug!!!|Expected: 0, Actual: -2
PASS - module without setup/teardown (default)
PASS - expect in test
PASS - expect in test
PASS - module with setup
PASS - module with setup/teardown
PASS - module without setup/teardown
PASS - scope check
PASS - scope check
PASS - modify testEnvironment
PASS - testEnvironment reset for next test
PASS - scope check
PASS - modify testEnvironment
PASS - testEnvironment reset for next test
PASS - makeurl working
PASS - makeurl working with settings from testEnvironment
PASS - each test can extend the module testEnvironment
PASS - jsDump output
PASS - raises
PASS - mod2
PASS - reset runs assertions
PASS - reset runs assertions2
----------------------------------------
PASS: 22 FAIL: 1 TOTAL: 23
Finished in 0.161 seconds.
----------------------------------------
```

The first hurdle was adding guards around all QUnit.js’s references to setTimeout, setInterval, and other browser/document specific objects. In addition I extended the test.js browser checks to include all of the asynchronous tests and fixture tests. Finally I cleaned up a bit of the jsDump code to work better with varying object call chains. My alterations can be found on my fork here.

The second hurdle was getting QUnit-CLI to use my modified version of QUnit.js and adjusting how Rhino errors are handled. Adding a QUnit submodule to the QUnit-CLI git repository easily fixed the first (I previously posted my notes on git submodules and fixed branches). For the second: QUnit.js uses borrowed jsDump code to “pretty-print” objects in test messages, and jzaefferer ran into an issue when running QUnit’s own tests through QUnit-CLI that resulted in this cryptic error:

```
js: "../qunit/qunit.js", line 1021: Java class "[B" has no public instance field or method named "setInterval".
    at ../qunit/qunit.js:1021
    at ../qunit/qunit.js:1002
    at ../qunit/qunit.js:1085
    at ../qunit/qunit.js:1085
    at ../qunit/qunit.js:1085
    at ../qunit/qunit.js:110
    at ../qunit/qunit.js:712 (process)
    at ../qunit/qunit.js:304
    at suite.js:84
```

It turns out that error objects (e.g. ReferenceError) thrown in Rhino include an additional rhinoException property, which points to the underlying Java exception that was actually thrown. The error we saw is generated when the jsDump code walks the error object tree down to a byte array hanging off of that Java exception (“[B” is the JVM’s internal name for the byte[] class). Property requests executed against this byte array throw the Java error above, even if they are done as part of a typeof check, e.g.

var property_exists = (typeof obj.property !== 'undefined');

Once I figured this out, I wrapped the object parser inside QUnit.jsDump to properly pretty-print error objects and delegate to the original code for any other type of object.

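```javascript
// ...

// Keep a reference to QUnit's original object parser
var current_object_parser = QUnit.jsDump.parsers.object;

QUnit.jsDump.setParser('object', function(obj) {
  if (typeof obj.rhinoException !== 'undefined') {
    // Rhino error: print a short summary instead of walking into the Java exception
    return obj.name + " { message: '" + obj.message + "', fileName: '" + obj.fileName + "', lineNumber: " + obj.lineNumber + " }";
  } else {
    // Anything else: delegate to the original parser
    return current_object_parser(obj);
  }
});

// ...
```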
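You can try the wrap-and-delegate idea outside Rhino with a minimal Node.js sketch. The `jsDump` object here is a hypothetical stand-in that has only a `parsers` map and a `setParser()` method. It is not QUnit's real jsDump. The “Rhino error” is a plain object given a fake `rhinoException` property:

```javascript
// Hypothetical stand-in for QUnit.jsDump: only what the wrapper touches.
const jsDump = {
  parsers: {
    // Simplified default object parser.
    object: (obj) =>
      '{ ' + Object.keys(obj).map((k) => k + ': ' + String(obj[k])).join(', ') + ' }',
  },
  setParser(name, parser) {
    this.parsers[name] = parser;
  },
};

// Capture the original parser before replacing it, so we can delegate to it.
const currentObjectParser = jsDump.parsers.object;

jsDump.setParser('object', function (obj) {
  if (typeof obj.rhinoException !== 'undefined') {
    // "Rhino error": summarize instead of walking into the Java exception.
    return obj.name + " { message: '" + obj.message + "', fileName: '" +
      obj.fileName + "', lineNumber: " + obj.lineNumber + ' }';
  }
  return currentObjectParser(obj); // everything else: original behavior
});

// A plain object posing as a Rhino error (fake rhinoException property).
const fakeRhinoError = {
  name: 'ReferenceError',
  message: 'x is not defined',
  fileName: 'suite.js',
  lineNumber: 84,
  rhinoException: {},
};

console.log(jsDump.parsers.object(fakeRhinoError));
console.log(jsDump.parsers.object({ a: 1, b: 'two' }));
```

The key design choice is capturing the original parser before replacing it. Calling `jsDump.parsers.object` from inside the new parser would recurse into the new parser itself.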

With these changes we have a decent command line executable test suite runner for QUnit. With a bit more work QUnit-CLI will hopefully be able to print Ant/JUnit style XML output and/or include stack traces when errors bubble out of test code.
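To give a rough idea of the XML direction, the sketch below turns collected results into the JUnit-style shape that CI servers such as Hudson commonly read. That shape is a `testsuite` element with `tests`, `failures` and `time` attributes, one `testcase` per test, and a `failure` child for each failing test. This follows the commonly seen convention rather than an official schema. The `results` array shape and the `toJUnitXml`/`escapeXml` helpers are hypothetical, invented for this example, and the sample data is made up from the run above:

```javascript
// Escape the five XML special characters for attribute/text content.
function escapeXml(s) {
  return String(s)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// results: hypothetical shape, e.g. [{ module, name, passed, message }]
function toJUnitXml(suiteName, results, seconds) {
  const failures = results.filter((r) => !r.passed).length;
  const lines = [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<testsuite name="' + escapeXml(suiteName) + '" tests="' + results.length +
      '" failures="' + failures + '" time="' + seconds + '">',
  ];
  for (const r of results) {
    const open = '  <testcase classname="' + escapeXml(r.module) +
      '" name="' + escapeXml(r.name) + '"';
    if (r.passed) {
      lines.push(open + '/>');
    } else {
      lines.push(open + '>');
      lines.push('    <failure message="' + escapeXml(r.message) + '"/>');
      lines.push('  </testcase>');
    }
  }
  lines.push('</testsuite>');
  return lines.join('\n');
}

console.log(toJUnitXml('suite.js', [
  { module: 'default', name: 'Adding numbers', passed: true },
  { module: 'default', name: 'Subtracting numbers', passed: false, message: 'Expected: 0, Actual: -2' },
], 0.161));
```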

Mocking frameworks are like cologne

Mocking frameworks are like cologne …

they help make your tests smell better, but they can cover up some really stinky code.

I was working my way through some old bookmarked blog posts when I ran across Decoupling tests with .NET 4 by Mr. Berridge, and it reminded me of a discussion I had a few weeks back while teaching a refactoring workshop. The discussion centered around the question: When should I use a mocking framework, and when should I use real objects or hand-coded stubs?

*** I will leave the questions of the differences between stubs & mocks and the merits of testing in true isolation versus using real objects for another day ***

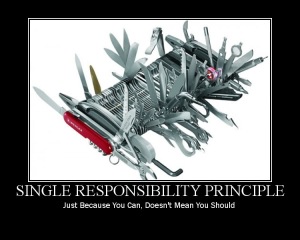

Mocking frameworks like Mockito or Moq are great tools, but lately I have been finding myself using them less and less. I have found that the more I use mocking frameworks the more easily I break the Single Responsibility Principle. Now, this obviously isn’t the fault of the tool. It is my fault. And my pair’s. The real problem is that we grew accustomed to using the tool and began to lean on it as a crutch. As we added more features our code took on more and more responsibilities; but we didn’t feel the pain directly because Mockito was doing more and more of the heavy lifting.

In Kevin’s post, he specifically talks about TDDing an object with many dependencies and the pain of updating each test as new dependencies were added. Now, I have a ton of respect for Kevin and his code … but I wonder if another solution to his problem would be to re-examine why the object needed so many dependencies. Is it doing too much? Could these dependencies be re-described and possibly combined into the overall roles they fulfill?

Kevin’s solution was clever and a nice tip to keep in my pocket. The devil is in the details. As I try to “follow the pain” I am trying to write more hand-coded stubs and evaluate any time I find myself relying on side-effects to verify how my code works. Wish me luck.
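Here is what a hand-coded stub can look like in JavaScript, for illustration. `OrderProcessor` and `RecordingNotifier` are hypothetical names and do not come from Kevin's post. The collaborator is described by a single role, so the stub takes a few lines and needs no framework:

```javascript
// Hypothetical production code: depends on one role ("notifier")
// rather than several separate collaborators.
class OrderProcessor {
  constructor(notifier) {
    this.notifier = notifier;
  }

  process(order) {
    const total = order.items.reduce((sum, item) => sum + item.price, 0);
    this.notifier.orderProcessed(order.id, total);
    return total;
  }
}

// Hand-coded stub: no framework, it simply records what it was told.
class RecordingNotifier {
  constructor() {
    this.calls = [];
  }

  orderProcessed(id, total) {
    this.calls.push({ id, total });
  }
}

const notifier = new RecordingNotifier();
const total = new OrderProcessor(notifier).process({ id: 7, items: [{ price: 2 }, { price: 3 }] });
console.log(total, notifier.calls);
```

If a stub like this starts needing many methods, the effort of writing it is the kind of feedback a mocking framework can hide.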

QUnit and the Command Line: One Step Closer

Previously I talked about getting QUnit JavaScript tests running on the command line using a simple Rhino setup. Hacking a few lines together meant that we could run our tests outside the constraints of a browser and potentially as part of an automated build (e.g. Hudson). We had just one big problem: QUnit can run outside of a browser, but it still makes LOTS of assumptions based on being in a browser.

Foremost was the fact that the QUnit.log callback passed back HTML in the message parameter. I wasn’t the only one to catch onto this issue (GitHub issue 32). While I was still formulating a plan of attack to refactor out all of the browser-oriented code, Jörn Zaefferer was putting a much simpler fix into place. His commit added an additional details object to all QUnit.log calls, which contains the original assertion message without HTML and possibly the raw expected and actual values (not yet documented on the main page). Problem solved!

Or so it seemed.

As I tried to hack my example CLI test runner to use the quick fix I ran into several issues.

Core QUnit logic still includes browser assumptions

Even with changes that wrap browser calls and guard against assuming certain browser objects exist, qunit.js is full of browser code. It would be great if the core unit testing code were separated from the code necessary to execute properly within a browser page, and both were separate from how test results are displayed on an HTML page. If these three responsibilities lived in 3 different objects, it would be simple to replace one or more of them with ones that fit the scenario much more closely, without needing to resort to hacks or breaking backward compatibility.
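Below is a minimal sketch of that three-way split. It is written in plain JavaScript rather than against QUnit's actual internals. It has a core runner that only produces result objects, an environment object that decides how work is scheduled, and a reporter that decides how results are displayed. All names (`runTests`, `cliEnv`, `textReporter`) are hypothetical:

```javascript
// 1) Core: runs tests and produces plain result objects. No DOM, no timers.
function runTests(tests, env, reporter) {
  const summary = { passed: 0, failed: 0 };
  const queue = tests.slice();

  function next() {
    const test = queue.shift();
    if (!test) {
      reporter.suiteDone({ ...summary, total: summary.passed + summary.failed });
      return;
    }
    let error = null;
    try {
      test.fn();
    } catch (e) {
      error = e.message;
    }
    if (error === null) {
      summary.passed++;
    } else {
      summary.failed++;
    }
    reporter.testDone({ name: test.name, ok: error === null, error });
    env.schedule(next); // the environment decides how to continue
  }

  env.schedule(next);
}

// 2) Environment: on the command line we can simply call straight through.
const cliEnv = { schedule: (fn) => fn() };

// 3) Reporter: plain-text lines instead of DOM manipulation.
function textReporter(lines) {
  return {
    testDone: (r) => lines.push((r.ok ? 'PASS - ' : 'FAIL - ') + r.name),
    suiteDone: (s) => lines.push('PASS: ' + s.passed + ' FAIL: ' + s.failed + ' TOTAL: ' + s.total),
  };
}

const lines = [];
runTests(
  [
    { name: 'Adding numbers', fn: () => { if (2 + 2 !== 4) throw new Error('bad add'); } },
    { name: 'Subtracting numbers', fn: () => { throw new Error('Intended bug!!!'); } },
  ],
  cliEnv,
  textReporter(lines)
);
console.log(lines.join('\n'));
```

A browser build could swap in a different environment, for example one that schedules with `setTimeout`, and an HTML reporter without touching `runTests`. Because the runner reaches the end of its queue exactly once, `suiteDone` fires once per run.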

Lifecycle callbacks break down outside of a browser

QUnit has some really nice lifecycle callbacks which anyone needing to integrate a testing tool can use. They include callbacks for starting and finishing each test, individual assertions, and the whole test suite. The first thing I wanted to add was reporting of the total number of passing and failing tests along with execution time when the tests were all done. This looked like a simple job for QUnit.begin and QUnit.done.

It turns out that QUnit.begin won’t get called unless there is a window “load” event …. which doesn’t happen in Rhino …. so that is out. To make matters worse, QUnit.done is getting called twice! For each test! This means that my “final” stats are spammed with each test run. With the help of Rhino’s debugger app, I saw that the culprits were the successive “done” calls near the end of the “test” function. Not sure how to fix that yet.

“Truthiness” in JavaScript is a double edged sword

Most of the time it is great not having to worry about whether a value is really false or whether it is undefined or some other “falsey” value. Only one problem: zero (0) is falsey too. Going back to my C days, this isn’t too big of a deal (and can be used in clever ways). However, if you are checking for the existence of an object’s properties by evaluating them as booleans … don’t. Sure, undefined is falsey, and if an object doesn’t have a property, accessing it returns undefined … but what if the value of that property really IS undefined, or in my case zero? No good.

```javascript
var foo = { cat: "dog", count: 0 };

!!foo.cat   // true
!!foo.bar   // false (doesn't exist, returns undefined)
!!foo.count // false (0 is falsey)
```

** The double bang (!!value) convention is a convenient way to convert any truthy or falsey value to the explicit boolean values true and false.
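If what you actually want to know is whether a property exists, ask that question directly instead of coercing the value. Below is a short comparison using a variant of `foo` from above. It adds a hypothetical `nothing` property that is present but set to undefined:

```javascript
const foo = { cat: "dog", count: 0, nothing: undefined };

// Coercing the value conflates "missing" with "present but falsey".
console.log(!!foo.count);                 // false, even though count exists

// `in` checks for the key itself (own or inherited properties).
console.log("count" in foo);              // true
console.log("nothing" in foo);            // true: present, value is undefined
console.log("bar" in foo);                // false: really missing

// hasOwnProperty ignores inherited properties such as toString.
console.log("toString" in foo);           // true (inherited from Object.prototype)
console.log(Object.prototype.hasOwnProperty.call(foo, "toString")); // false

// Comparing to undefined still cannot tell "nothing" apart from "bar".
console.log(foo.nothing !== undefined);   // false
console.log(foo.bar !== undefined);       // false
```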

Unit test frameworks should standardize the order of arguments!

This is a personal pet peeve. I wish all unit testing frameworks would standardize whether the EXPECTED value or the ACTUAL value goes first in equality assertions. JUnit puts expected first. TestNG puts it second. QUnit puts it second. This is damn confusing if you have to switch between these tools frequently.

I moved my current code to a new GitHub repo -> QUnit-CLI. As I learn more and find a better solution, I will keep this repo updated. Currently the code outputs one line for each passing test and a more detailed set of lines for each failing test (including individual assertion results). Because of the QUnit.done problem above, the “final” summary, which shows the total test results and execution time, is printed twice for each test (making it not very “final”). [Edited to have correct link]
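Here is a formatter that a QUnit.log callback could use to take advantage of the details object described above, for illustration only. It prefers the details object's plain-text message and falls back to stripping tags from the HTML message. The `(result, message, details)` signature and the `details.message`/`expected`/`actual` field names are assumptions based on that description, not a documented API. `formatAssertion` and `stripTags` are hypothetical helpers, and the tag-stripping regex is a crude fallback that only suits simple markup like QUnit's `<span>` wrappers:

```javascript
// Crude fallback: remove simple HTML tags such as <span>...</span>.
function stripTags(html) {
  return String(html).replace(/<[^>]*>/g, '');
}

// Hypothetical formatter for a QUnit.log(result, message, details) callback.
function formatAssertion(result, message, details) {
  const status = result ? 'PASS' : 'FAIL';
  const text = details && typeof details.message !== 'undefined'
    ? details.message
    : stripTags(message);
  let line = status + ' ' + text;
  // Show expected/actual only for failures, and only if they were provided.
  if (!result && details && 'expected' in details) {
    line += ' (expected: ' + details.expected + ', actual: ' + details.actual + ')';
  }
  return line;
}

// In a Rhino suite this might be wired up roughly as:
//   QUnit.log = function (result, message, details) {
//     print(formatAssertion(result, message, details));
//   };
console.log(formatAssertion(true, '<span>2 + 2 = 4</span>, expected: <span>4</span>'));
console.log(formatAssertion(false, '<span>oops</span>', { message: 'oops', expected: 0, actual: -2 }));
```

The `'expected' in details` check is deliberate. Testing `details.expected` for truthiness would hide an expected value of 0, which is the same trap described in the truthiness notes above.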

~~~~~~~~~~

Side Note: As an end goal, I would like to build a CLI for QUnit that will output Ant+JUnit style XML output which would make integrating these test results with other tools a piece of cake. I CAN’T FIND THE XML DOCUMENTED ANYWHERE! Lots of people have anecdotal evidence of how the XML will be produced but no one seems to have a DTD or XSD that is “official”. If anyone knows of good documentation of the XML reports Ant generates for JUnit runs please let me know. Thanks.

~~~~~~~~~~

λ This post has nothing to do with Lisp or Clojure λ

Make JavaScript tests part of your build: QUnit & Rhino

I know some zealous Asynchronites who don’t believe code exists unless:

- it has tests

- code and tests are in source control

- tests are running on the continuous integration (CI) build

Sound crazy? If so, that is my kind of crazy.

Lately I have been hacking around a lot with JavaScript (HTML5, NodeJS, etc…) and was asked by another team if I had any suggestions on testing JavaScript and how they could integrate it into their build. Most of my experience testing JavaScript has been done by executing user acceptance tests written with tools like Selenium and Cucumber (load a real browser and click/act like a real user). Unfortunately these tests are slow and brittle compared to our unit tests. More and more of today’s dynamic web apps have large amounts of business logic client side that doesn’t have a direct dependency on the browser. What I want are FAST tests that I can run with every single change, before every single commit, and headless on our build server. They might not catch everything, but they will catch a lot long before the UATs are finished.

Goal : Run JavaScript unit tests as part of our automated build

The first hurdle was finding a way to run the code headless outside of a browser. Several of today’s embedded JavaScript interpreters are available as separate projects, but for simplicity I like Rhino. Rhino is an interpreter written entirely in Java and maintained by the Mozilla Foundation. Running out of a single .jar file and with a nice GUI debugger, Rhino is a good place to start running JavaScript from the command line. While not fully CommonJS compliant yet, Rhino offers a lot of nice native functions for loading other files, interacting with standard I/O, etc. Also, you can easily embed Rhino in Java apps (it is now included in Java 6) and extend it with Java code.

My JS testing framework of choice lately has been JSpec, which I really like: nice GUI output, tons of assertions/matchers, async support, Rhino integration, and a really nice RSpec-esque grammar. Unfortunately JSpec works best on a Mac, and the team in question mainly needs to support Windows/*nix.

Enter QUnit: simple, fast, and used to test jQuery. Only one problem: QUnit is designed to run in a browser and gives all of its output in the form of DOM manipulations. Hope was almost lost when I found a tweet by John Resig himself suggesting that QUnit could be made to work with a command line JavaScript interpreter. Sadly, despite many claims of this functionality, I couldn’t find a single good tutorial showing how, nor a developer leveraging this approach. A bit of hacking, a lucky catch while reading the Env.js tutorial, and twada’s qunit-tap project came together in this simple solution:

Step 1 : Write a test (myLibTest.js)

Create a simple test file for an even simpler library function.

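```javascript
test("Adding numbers works", function() {
  expect(3); // this test should run exactly 3 assertions

  ok(newAddition, "function exists");
  // QUnit's equals() takes the actual value first, then the expected value
  equals(newAddition(2, 2), 4, "2 + 2 = 4");
  equals(newAddition(100, 0), 100, "zero is zero");
});
```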

Step 2 : Create a test suite (suite.js)

This code could be included in the test file, but I like to keep them separate so that myLibTest.js can be included in an HTML page for running QUnit in normal browser mode without making any changes.

This file contains all of the Rhino-specific commands as well as some formatting changes for QUnit. After loading QUnit, we need to do a bit of housework to set up QUnit to run on the command line, and we override the log callback to print our test results to standard output. QUnit offers a number of callbacks which can be overridden to integrate with other testing tools (find them on the QUnit home page under “Integration into Browser Automation Tools”). Finally, load our library and our tests.

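```javascript
// Load QUnit itself (path relative to this suite file)
load("../qunit/qunit/qunit.js");

// Set QUnit up to run without a browser page
QUnit.init();
QUnit.config.blocking = false;
QUnit.config.autorun = true;
QUnit.config.updateRate = 0;

// Print each assertion result to standard output
QUnit.log = function(result, message) {
  print(result ? 'PASS' : 'FAIL', message);
};

// Load the library under test, then its tests
load("myLib.js");
load("myLibTest.js");
```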

Step 3 : Write the code (myLib.js)

```javascript
function newAddition(x, y) {
  return x + y;
}
```

Step 4 : Run it

Running Rhino is a piece of cake, but I am VERY lazy, so I created a couple of aliases to make things dead simple (taken from the Env.js tutorial; jsd runs the debugger):

```shell
# $HOME rather than ~, because a tilde inside double quotes is not expanded
export RHINO_HOME="$HOME/development/rhino1_7R2"
alias js="java -cp $RHINO_HOME/js.jar org.mozilla.javascript.tools.shell.Main -opt -1"
alias jsd="java -cp $RHINO_HOME/js.jar org.mozilla.javascript.tools.debugger.Main"
```

Once the aliases are created, simply run the suite:

```
> js suite.js
PASS function exists
PASS <span>2 + 2 = 4</span>, expected: <span>4</span>
PASS <span>zero is zero</span>, expected: <span>100</span>
```

Step 5 : Profit!

There you go: command line output of your JavaScript unit tests. Now we can test our “pure” JavaScript, which doesn’t rely on the DOM. With a tool like Env.js, testing DOM-dependent code is also possible; that will be discussed in a future post.

Step 5+ : Notice the problem -> HTML output on the command line

You may have noticed that my output messages include HTML markup. Sadly QUnit still has assumptions that it is running in a browser and/or reporting to an HTML file. Over the next couple weeks I am going to work on refactoring out the output formatting code from the core unit testing logic and hopefully build out separate HTML, human readable command line, and Ant/JUnit XML output formatters so that integrating QUnit into your build process and Ant tasks is a piece of cake.

Track my progress on my GitHub fork and on the main QUnit project issue report.

[EDIT] I have posted about some of my progress and setbacks here.

Test-infected Marshmallows and Being a Passionate Professional

Alex Miller presented a talk at his recent Strange Loop conference about Walter Mischel’s marshmallow experiments. Mischel studied children’s ability to defer gratification. The children were put in a room with a marshmallow and told they would receive another one in ~20 minutes if they didn’t eat the first. The longer-term study found that kids who were able to wait scored much higher in “life metrics” later on (e.g. work success, school studies, etc).

Alex’s talk got me thinking about deferring gratification in software development …. which immediately made me think about testing.

In college, there were no tests. Just me, alone, with lots of code. And, there were lots of headaches and confusion. Fast.

Now I write tests and consider the behavior of my system before I write a single line of code. Now every line of code is written with a pair. After every iteration the team gets together to discuss how we are doing and how we can do it better. There are some headaches but a lot less confusion. Now there is pride and quality.

Having the discipline of writing tests first, and developing software in thin vertical slices from the top down is hard. It is hard because we desire closure, and immediate feedback. But by focusing our energy on producing a long term quality product, we can achieve a higher level of satisfaction.

Being surrounded with that many passionate professionals makes you want to be better.

A huge thanks to Alex for putting on a great conference here in St. Louis. I can’t wait for next year.

Cucumber: Making UATs the healthy choice

My current project team has been eating its vegetables. Has yours?

Selenium is a great tool for testing webapps from a true browser-driven user’s perspective. By driving most mainstream browsers via a core JavaScript library, a Selenium script can easily test how your app behaves (or misbehaves) just as a real user would see it.

“Webrat lets you quickly write robust and thorough acceptance tests for a web application.” Written in Ruby, Webrat allows acceptance tests to exercise an arbitrary web application through its Selenium integration, or Rails apps without starting a server.

Cucumber allows code to be written in a simple domain specific language (DSL) that anyone can read. Layer Cucumber on top of Webrat and Selenium, and you have User Acceptance Tests (UATs) that the client can read (and ideally write), that the entire development team understands, and which can be run as BDD or regression tests.

Without going into the details of how each of these libraries work, below are some of my notes, tips, and impressions of using Cucumber to write UATs for a greenfield Java EE application over the past ~10 weeks.

- Ideally, each test would run in its own transaction so that they wouldn’t interfere with each other and could be run in parallel. Using Cucumber (Ruby) to test a totally separate web application running on Tomcat (Java) presents a problem. The team debated several possible solutions using JRuby and having the Cucumber build spawn an embedded Tomcat instance but decided that the cost outweighed the benefit to the current project.

- Since we were forced to use pure JDBC by our client, we decided to use ActiveRecord migrations to build our tables, and generate SQL which we could re-leverage from our Cucumber tests. Custom creation Webrat steps were written to push setup test data for each model.

- DB2 support with the Ruby ActiveRecord drivers is lacking proper install instructions on Linux (the damn thing requires a full DB2 install to run). By leveraging JRuby, we were able to use the DB2 JDBC drivers instead, which worked much better (without installing DB2). Getting Cucumber, Webrat, and Selenium running in JRuby took a bit of finesse and shebang wrangling but eventually worked.

- Outputting Cucumber results to a JUnit style XML output made our integration on our Hudson CI server simple and easy to read. (The Chuck Norris plugin helps too)

- We tag each test with a story number (e.g. @10045) so that we can quickly run all tests for a given feature: cucumber --tags @10045.

- Our QA lead works to define and write-up our Cucumber tests during pre-iteration planning and before each story enters our work queue (the team uses a modified Kanban board approach to pull work through each iteration, Cucumber is our first queue). When she has a test written, she will tag it with @in-process to let us know that it is ready to be worked on, but not yet implemented.

- We have custom Rake tasks to run all of our “finished” tests as well as just our “in-process” ones. The in-process task will fail if any tests pass (they should be marked as finished). Unfortunately this rake task doesn’t work with the Hudson/JUnit build.

- Ongoing issues we still struggle with are:

  - making sure that small variations in DSL verbiage don’t muddy up our tests (it is easy for the team to accidentally end up with two different commands which do the same or similar actions without a clear distinction)

  - cross-browser and separate environment testing happens on different dedicated servers on dependent builds so that they don’t end up stepping on each other

  - as with any testing library, care needs to be taken to group only truly common tests together and to limit setup/background work to the tests that NEED it. Pushing too much work into common actions results in slow tests

- These tests are far faster and more valuable than manual tests, but they are not as fast as JUnit tests and will never replace manual exploratory testing.

Overall the team has been very pleased with our ability to drive our development from the tests and verify that new changes don’t break existing functionality from the users’ perspectives.

* Another Asynchrony team is working on leveraging Cuke4Duke to do similar testing of a Java thick client. Should be interesting.

Edit: Thanks to Amos King for some proof reading help. You can find his blog over at Dirty Information.

using a different compiler target for maven tests

Recently I ran into a problem.

My team has been tasked with writing a library to help with automated testing within the client’s organization. Great. Since the teams who are going to use our code use Maven, it was only fitting for us to release our library as a simple Maven artifact into the corporate Maven repository and let them pull it down from there. Everything was going smoothly until we actually tried to get someone to use it. Evidently, since the project teams are deploying their code to WebSphere 6.0, they are using Java 1.4. Our testing library uses TestNG and requires Java 5. Crap.

Since only the tests required Java 5 and they weren’t going to be deployed, this shouldn’t have been an issue in theory. The problem came in the form of Maven. Our initial plan was to have the project team testers fully integrate their UATs into the project’s build, placing their tests alongside any other unit/integration tests the team could muster. The developer and build servers had Java 5 installed, so the only real trick was convincing Maven to compile and execute the tests using Java 5, but compile and package the product code using Java 1.4.

** A good friend and coworker pointed out that we should make sure the “code” tests are compiled and run in the same VM version as the production code, so that we didn’t have any compatibility issues, and I totally agreed. Luckily, by the time he pointed this out, I had found my ultimate solution. **

Idea #1: Configure the Maven compiler plugin to use Java 5. Simple, and effective. Too effective. This will change the settings for both the compiler:compile and the compiler:testCompile goals. No go.

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-compiler-plugin</artifactId>
  <configuration>
    <source>1.5</source>
    <target>1.5</target>
  </configuration>
</plugin>
```

Idea #2: Somehow set test-compile specific properties for Maven, allowing the normal compile goal to function as it had and the test-compile to use Java 5. This might have worked except for two things: 1) our unit tests would no longer be compiled the same way as our code, and 2) despite seeing what appeared to be test-compile specific source and target properties here and here … I could never get it to work. No go.

Idea #3: Find a way to do what I did in Idea #1, but ONLY for the test-compile goal. This one seemed straightforward, but it took quite a bit of surfing to finally find an example of how it might be done. A great Codehaus.org article, prompted by the exact same issue, included an exact example of what I needed to do. The solution used a separate execution of the compiler plugin to achieve what I wanted. And since the default settings were fine for the normal code compile, my configuration was even simpler. Works (except for the unit tests in Java 5 issue).

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-compiler-plugin</artifactId>
  <executions>
    <execution>
      <id>java-1.5-compile</id>
      <phase>process-test-sources</phase>
      <goals>
        <goal>testCompile</goal>
      </goals>
      <configuration>
        <source>1.5</source>
        <target>1.5</target>
      </configuration>
    </execution>
  </executions>
</plugin>
```

Idea #4 (What I ended up doing): Create a totally separate module to be the home for UATs. After I solved my problem with Idea #3, a few other unrelated issues crept up, and I decided something needed to change. I wanted the tests integrated with the rest of the code so that anyone on the team could/would actually write and run them. The final solution was to move the UATs out of the main development project and into their own. The client’s development teams had already decided on a multi-module Maven project approach, and this seemed like a natural evolution. We created a separate UATs Maven module which, along with the main code project and some other misc sub-modules, was rolled up under one parent pom.xml for the project. Under this scheme I could use Idea #1 to set the compilation source and target for the UATs project to 1.5 across the board without worrying about screwing up the compilation of the main project or its unit tests. Done.

TestNG Group Gotcha

I have been forcing myself to use TestNG lately, both because it’s what my current client uses and to try something new. Overall I have been happy with it, but I don’t think I will be switching from JUnit any time soon on projects where I have a choice. Yes, TestNG has several features which JUnit doesn’t, but JUnit 4 made up a lot of ground from when TestNG was first released in response to the shortcomings of JUnit 3. Plus, I just don’t think I would use a lot of those features very often.

One feature that did make a lot of sense to me was the idea of test groups. Basically, the @Test annotation allows you to specify any number of strings as group names that the given test will belong to. Then, when you want to execute a test suite, you can specify which groups are included or excluded from your suite. Sounds simple enough and would allow you to divide up your test executions if you wanted to. So I gave it a shot.

I wrote up a new test, gave it a label of “integration” (distinguishing it from my normal unit tests) and tried to run it. ERRRR. NullPointerException. After several minutes verifying I had things set up correctly, I realized the problem was that my @BeforeSuite and @BeforeMethod methods were not being called. But the test was working a minute ago …. a quick check of the docs revealed that all of the @BeforeXXX and @AfterXXX methods ALSO can have a groups setting. Meaning that when I told TestNG to run just the tests with a group of “integration”, I was really telling it to only execute methods with that group, configuration methods included.

This wasn’t the behavior I was expecting, but at least I understood what was going on after I read the docs. If I added the group to my support methods, everything would be fine. Only one problem. The @BeforeXXX and @AfterXXX methods in question are in a base class and shouldn’t need to know about any groups a test class wants to use.

Enter the alwaysRun annotation property. According to the TestNG docs:

For before methods (beforeSuite, beforeTest, beforeTestClass and beforeTestMethod, but not beforeGroups): If set to true, this configuration method will be run regardless of what groups it belongs to.

For after methods (afterSuite, afterClass, …): If set to true, this configuration method will be run even if one or more methods invoked previously failed or was skipped.

By adding this to the base class, the correct methods are run each time. I am not 100% sure this won’t have other side effects that I haven’t found yet, but as it stands, it allows me to use groups in my tests AND use the base class to set up and tear down some of our common objects. It’s not a perfect solution, but it works.

Remember the XPath Axes

Today I was having some trouble coming up with a Selenium XPath locator for a deeply nested select element with a dynamically generated ID. To make matters worse, there were no nearby logical elements tied to this element that had an easy locator. The only clear thing I had was the label for the option I wanted to select. Not wanting to leave the test with an extremely brittle, not to mention long and unreadable, XPath locator … I remembered that I had solved this problem before.

Several times now I have wanted to use an XPath expression to find an element by its child element. Cue XPath axes.

Since I have forgotten the syntax and usage of axes several times now, here I post the solution for posterity’s sake. If anyone has a better solution to this issue, please let me know … but this seemed to work well enough.

XPath axes allow you to specify a “location step” from a[ny] specified element. What does this mean? We can select elements based on their location relative to another element, and NOT just elements contained within it. They are specified by an “axis specifier” followed by :: and an element selector. Example:

In order to select a SELECT element by one of its OPTIONs:

//OPTION[text() = 'Choice Text']/parent::SELECT

This XPath selects the SELECT element which is the parent of any OPTION element containing a text node with the value ‘Choice Text’. It is not as simple as a locator pointing directly at the desired element by its ID. Still, this expression is composed only of elements we actually care about with regard to the test (the option we want and the select element it’s in). It is also more readable than a 50+ character expression going back to the root element and containing numerous indexes.

XPath axes can select a number of things including an elements children, ancestors, siblings (preceding and following), etc.

For the next time I forget the syntax, ZVON has a nice site with examples of various axes and their syntax, and Greg Collins has a nice post on how the sibling axes work.

Also, at least in XPather, an expression that would otherwise return the same element more than once through an axis, only returns the unique list of elements (e.g. //div/ancestor::* will return the root element only once and not once per DIV found)
